How Did We Get Here?

Archival Process

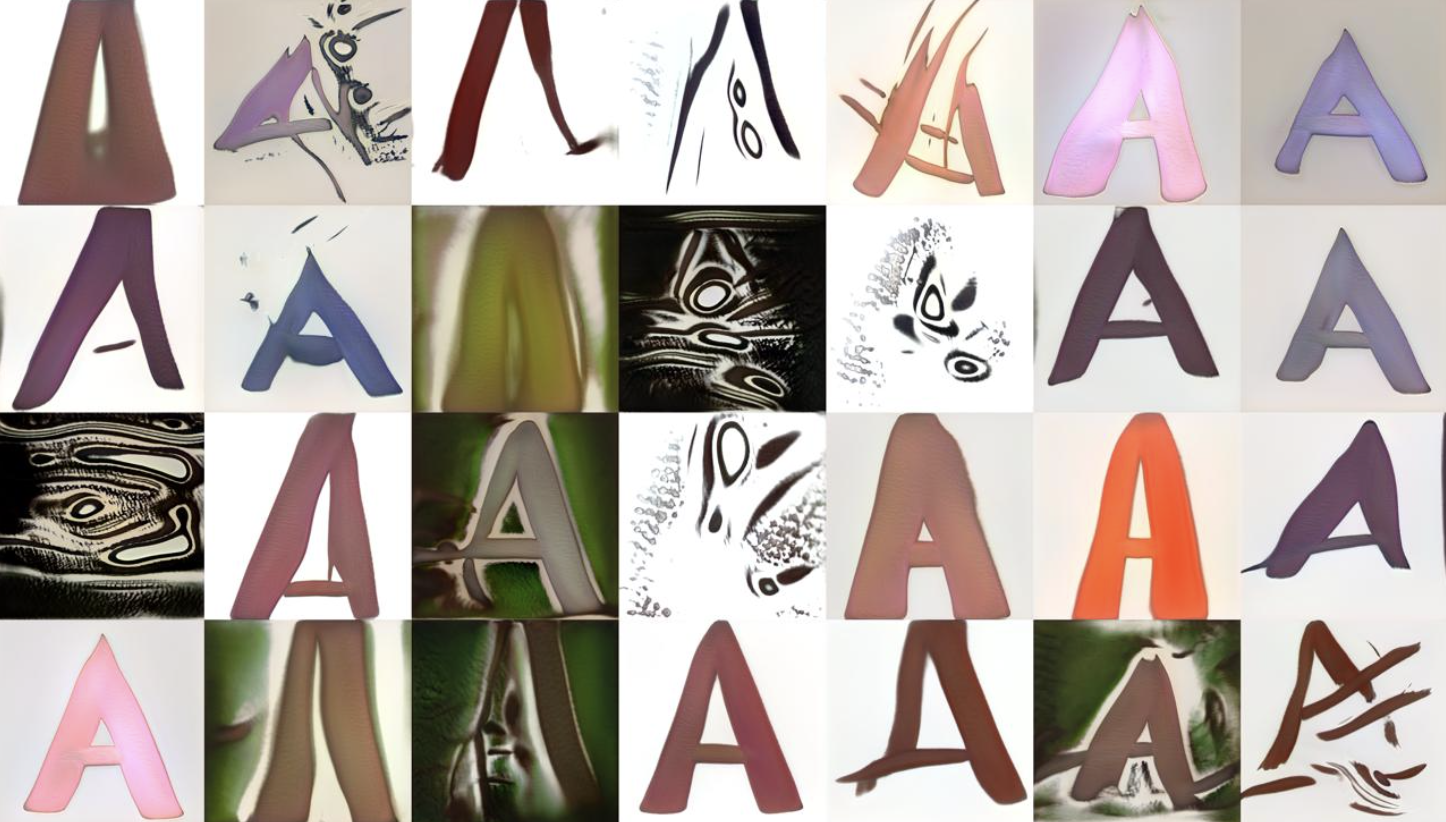

Before we started anything, we tested the AI system in Runway ML Lab, a major tool used in our project. We started off with the simplest of letters: the letter A. Because we were unsure how the interface and software worked, we archived 50 images of the letter A for the AI system to learn from. It then generated its own output based on the letter: a set of generative images and a morphing video called a "Latent Walk Video".

Runway ML Lab is an online tool whose machine learning algorithm learns the patterns within a given dataset of images, training on a remote GPU.
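A latent walk video is commonly made by picking a few points in a trained model's latent space and rendering frames along a smooth path between them. The Python sketch below shows only the path-building step, under stated assumptions. The 512-dimensional Gaussian latent vectors, the use of spherical linear interpolation (slerp), and the helper names `slerp` and `latent_walk` are illustrative choices of ours and do not describe how Runway ML Lab implements it internally. Each resulting vector would then be passed to the trained generator to render one frame of the video, and that step is not shown.

```python
import math
import random


def slerp(a, b, t):
    """Spherical linear interpolation between vectors a and b at fraction t in [0, 1]."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    # Clamp to guard against tiny floating-point overshoot outside [-1, 1].
    cos_omega = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    omega = math.acos(cos_omega)
    if omega < 1e-6:
        # Vectors are almost parallel: fall back to plain linear interpolation.
        return [(1 - t) * x + t * y for x, y in zip(a, b)]
    sin_omega = math.sin(omega)
    wa = math.sin((1 - t) * omega) / sin_omega
    wb = math.sin(t * omega) / sin_omega
    return [wa * x + wb * y for x, y in zip(a, b)]


def latent_walk(num_keyframes, steps_per_segment, dim=512, seed=0):
    """Hypothetical helper: latent vectors tracing a path through random keyframes.

    The 512-dimensional standard-normal latent space is an assumption.
    """
    rng = random.Random(seed)
    keyframes = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_keyframes)]
    frames = []
    # Interpolate between each pair of consecutive keyframes.
    for a, b in zip(keyframes, keyframes[1:]):
        for step in range(steps_per_segment):
            frames.append(slerp(a, b, step / steps_per_segment))
    # Finish exactly on the last keyframe.
    frames.append(keyframes[-1])
    return frames


frames = latent_walk(num_keyframes=4, steps_per_segment=30)
print(len(frames))  # 3 segments * 30 steps + final keyframe = 91
```

Slerp is a common choice here because it keeps interpolated points at a similar distance from the origin as the Gaussian keyframes, so intermediate frames tend to look as plausible as the keyframes themselves.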

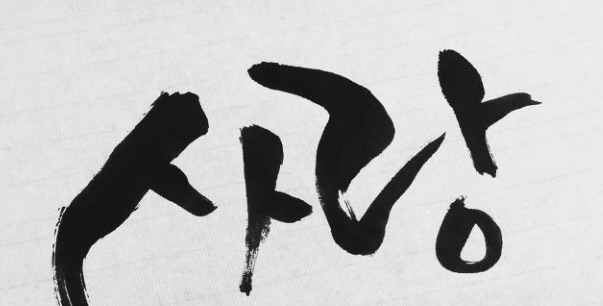

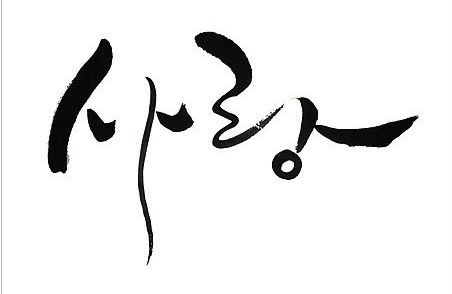

How do we communicate Love?

Coming from different cultural backgrounds, we archived images of different linguistic interpretations of love, using languages we each have a connection to: Korean for Seoyeon, Mandarin for Rachel, and Arabic for Fatih.

/arabic.jpg)

/arabic.gif)

/chinese.jpeg)

/chinese.gif)

/korean.jpeg)

/korean.gif)

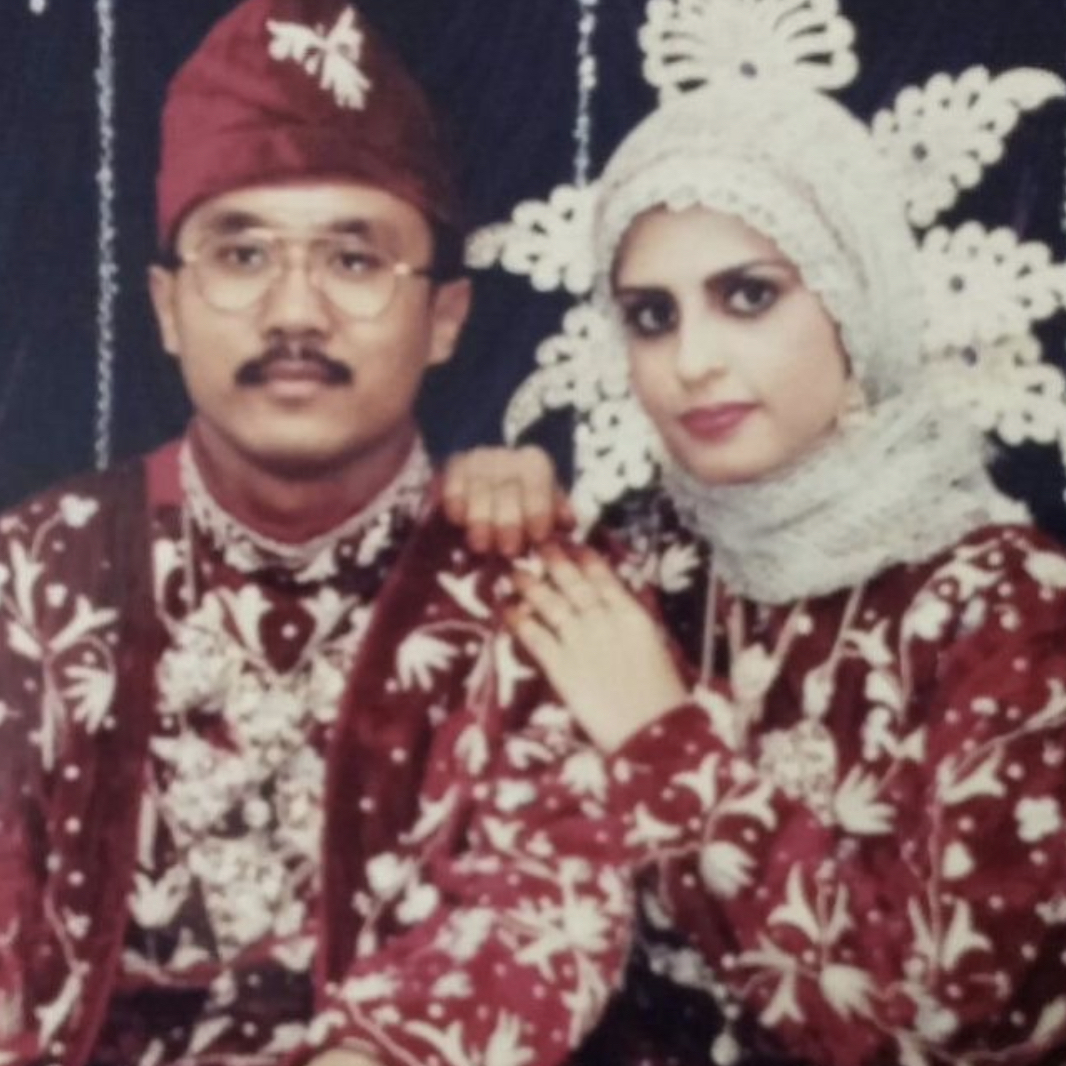

How do we visualise Love?

Love is subjective, and everyone perceives it differently. So we made it personal, archiving images that symbolise and mean love to each of us. Does the AI know that the lines of code that make these images actually symbolise love to us?

/fatihlove.jpg)

/fatihlove.gif)

/rachellove.jpeg)

/rachellove.gif)

/seoyeonlove.jpeg)

/seoyeonlove.gif)

Let's have a Conversation.

We went on to push the limits of artificial intelligence by having a conversation about love, with zero expectations and driven purely by curiosity. We used three different online AI chats for different conversations.